Airflow on AWS EKS

“Running Airflow on Amazon EKS with EC2 OnDemand & Spot Instances”

Hello Readers,

Before we dive into the technical details, let me introduce myself.

I am Kunal Shah, an AWS Certified Solutions Architect who helps clients achieve optimal solutions on the cloud. I am a Cloud Enabler by choice, with 6+ years of experience in the IT industry.

I love to talk about Cloud Technology, Digital Transformation, Analytics, DevOps, Operational efficiency, Cost Optimization, Cloud Networking & Security.

You can reach out to me @ linkedin.com/in/kunal-shah07

This is my first blog! Finally Woohoo…{You can imagine the excitement :P}

“Sometimes too late is just in time” #IYKYK

Let's get started with deploying AIRFLOW ON AWS EKS smoothly. Literally, I mean it!! :)

AIRFLOW - Airflow is a platform to programmatically author, schedule, and monitor workflows. A workflow is represented as a DAG (Directed Acyclic Graph) and contains individual pieces of work called Tasks, arranged with dependencies.

Features – Scalable, Dynamic, Extensible, Elegant, Easy to use.

Core Components – Scheduler, Executor, DAG, Webserver, Metadata Database

The best part is that both Airflow & Kubernetes are open-source tools.

EKS - Amazon Elastic Kubernetes Service (Amazon EKS) is a managed service that you can use to run Kubernetes on AWS without needing to install, operate, and maintain your own Kubernetes control plane or nodes.

Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

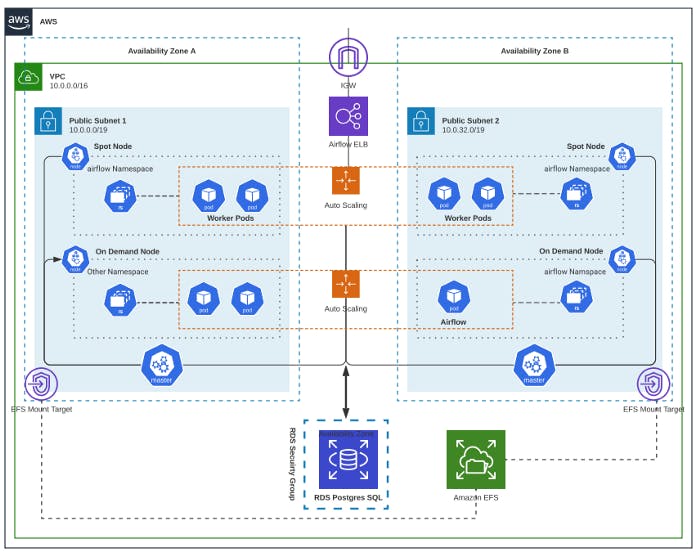

ARCHITECTURE

PREREQUISITE -

Your local machine with git bash/cmd OR an Amazon Linux EC2 instance. I am going with a t2.micro Amazon EC2 instance (Free Tier eligible), “tried & tested”.

DEPLOYMENT-

We use an EC2 (Bastion Host) instance to deploy the AWS EKS cluster & to communicate with it using kubectl.

Once the EC2 instance is deployed, we will install the following dependencies on it:

AWS CLI version 2 — docs.aws.amazon.com/cli/latest/userguide/cl..

eksctl — docs.aws.amazon.com/eks/latest/userguide/ek..

kubectl — docs.aws.amazon.com/eks/latest/userguide/in..

Docker — docs.aws.amazon.com/AmazonECS/latest/develo..

Helm — eksworkshop.com/beginner/060_helm/helm_intr..

git — yum install git
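Before moving on, it can help to confirm that every tool from the list above is actually on the PATH of the bastion host. The snippet below is only a minimal sketch: the helper name missing_tools is hypothetical and is not part of any of these tools.

```shell
# Hypothetical helper: prints the name of every given command that is
# not found on PATH, one per line. Prints nothing if all are present.
missing_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}

# Usage on the bastion host (commented out so the snippet has no side effects):
# missing_tools aws eksctl kubectl docker helm git
```

If the call prints nothing, all the dependencies are installed; otherwise it lists the ones that still need to be set up.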

IMP: Generate your Access Key & Secret Key and configure them on the EC2 instance (for example with aws configure).

Let's start by setting a few environment variables to match our environment:

AOK_AWS_REGION=us-east-1 #<-- Change this to match your region

AOK_ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' --output text)

AOK_EKS_CLUSTER_NAME=Airflow-on-Kubernetes
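If the AWS credentials are not configured, the aws sts call fails and AOK_ACCOUNT_ID ends up empty, which tends to surface only later as confusing errors. A small guard like the one below can catch that early. This is a sketch, and require_vars is a hypothetical helper name, not something provided by the AWS CLI or the repos used here.

```shell
# Hypothetical helper: returns non-zero and names the first variable
# (passed by name) that is unset or empty.
require_vars() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      return 1
    fi
  done
}

# Usage (commented out): stop early if anything is missing.
# require_vars AOK_AWS_REGION AOK_ACCOUNT_ID AOK_EKS_CLUSTER_NAME || exit 1
```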

Now we have to deploy the EKS cluster using the eksctl command-line utility.

Download / Clone repo on Amazon EC2 Linux:

github.com/Kunal-Shah107/AIRFLOW-ON-AWS-EKS

{REPO with all necessary notes for this demo/poc}

Now run the below command on Amazon Linux EC2:

eksctl create cluster -f ekscluster.yaml

(Note — ekscluster.yaml is the file in which we define the parameters of NodeGroups & Instance Types)
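To give an idea of what such a file can look like, here is a sketch that writes an illustrative eksctl ClusterConfig with one On-Demand and one Spot managed node group. It is not the file from the repo: the function name write_cluster_sketch, the node group names, the instance types, and the capacities are all assumptions chosen for illustration. Only the lifecycle labels are taken from the kubectl selectors used in this post.

```shell
# Writes an illustrative eksctl ClusterConfig to the path given as $1.
# Node group names, instance types and capacities are assumptions.
write_cluster_sketch() {
  cat > "$1" <<EOF
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${AOK_EKS_CLUSTER_NAME:-Airflow-on-Kubernetes}
  region: ${AOK_AWS_REGION:-us-east-1}
managedNodeGroups:
  - name: ng-on-demand          # intended for the Airflow web UI and scheduler
    instanceType: m5.large
    desiredCapacity: 2
    labels:
      lifecycle: OnDemand
  - name: ng-spot               # intended for workflow tasks
    instanceTypes: ["m5.large", "m4.large", "m5a.large"]
    spot: true
    desiredCapacity: 2
    labels:
      lifecycle: Ec2Spot
EOF
}

# Usage (commented out): write to a separate file so the repo's
# ekscluster.yaml is not overwritten.
# write_cluster_sketch ekscluster-sketch.yaml
```

Listing several instance types for the Spot group is a common choice because it gives EC2 more capacity pools to draw from, but the right mix depends on your workload.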

This will create the security groups (SGs), the EKS cluster (control plane), the node groups, and the instance roles.

In the background, eksctl creates three CloudFormation stacks to deploy the resources: 1. EKS cluster, 2. On-Demand node group, 3. Spot node group.

Be patient, as it will take 15 to 20 minutes to set up the entire cluster infrastructure.

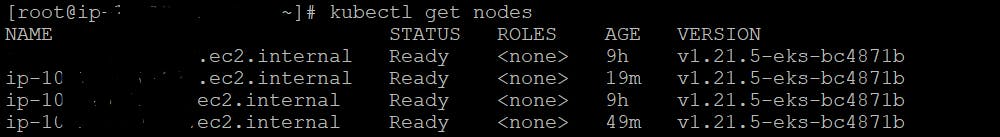

Once the cluster is deployed, we can cross-check the nodes and services running in it from the AWS console & CLI.

- kubectl get nodes

- kubectl get pods -n airflow { airflow is your namespace }

- kubectl get nodes --label-columns=lifecycle --selector=lifecycle=Ec2Spot

- kubectl get nodes --label-columns=lifecycle --selector=lifecycle=OnDemand
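If you would rather get a number than read the table by eye, the node listing can be piped through a small filter. The sketch below assumes the default column layout of kubectl get nodes (NAME, STATUS, ROLES, AGE, VERSION); count_ready_nodes is a hypothetical helper name.

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on
# stdin and prints how many nodes report a STATUS of exactly "Ready".
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster (commented out):
# kubectl get nodes --no-headers --selector=lifecycle=Ec2Spot | count_ready_nodes
```

Nodes that are NotReady or cordoned (for example Ready,SchedulingDisabled) are deliberately not counted.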

AWS EKS Cluster will be publicly accessible.

This cluster will have two managed node groups:

On-Demand node group that will run the pods for Airflow web-UI and scheduler.

Spot-backed node group to run workflow tasks.

Next, we set up the supporting infrastructure using the git repo provided by AWS, which we clone below. We have to change the parameters as per our requirements.

git clone github.com/aws-samples/airflow-for-amazon-e..

{This repo has all folders & parameters for airflow on eks setup}

cd airflow-for-amazon-eks-blog/scripts

. ./setup_infra.sh

The setup_infra.sh script deploys the following infrastructure: PostgreSQL DB, Auto-Scaling Group, IAM role, IAM Policies, EFS, Access Points, EIP, EC2s & SGs.

Now start the Docker service. We will then build the Docker image that is used to create the pods in the node groups.

service docker start

Build and push the Airflow container image to the ECR repository that we created earlier as part of the environment setup:

aws ecr get-login-password --region $AOK_AWS_REGION | docker login --username AWS --password-stdin $AOK_AIRFLOW_REPOSITORY

docker build -t $AOK_AIRFLOW_REPOSITORY .

docker push ${AOK_AIRFLOW_REPOSITORY}:latest
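If the login or push fails, a quick sanity check is to compare $AOK_AIRFLOW_REPOSITORY with the shape an ECR repository URI normally has in the standard commercial regions. The sketch below only builds that string; ecr_repo_uri is a hypothetical helper, and the repository name in the usage line is a placeholder, not the name the setup script actually uses.

```shell
# Hypothetical helper: builds an ECR repository URI from its parts.
# Arguments: <account-id> <region> <repository-name>
ecr_repo_uri() {
  printf '%s.dkr.ecr.%s.amazonaws.com/%s\n' "$1" "$2" "$3"
}

# Usage (commented out; "my-airflow-repo" is a placeholder name):
# ecr_repo_uri "$AOK_ACCOUNT_ID" "$AOK_AWS_REGION" my-airflow-repo
# echo "$AOK_AIRFLOW_REPOSITORY"
```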

Once the docker image is pushed to AWS ECR we can now deploy the pods.

cd ../kube {change the directory to kube}

./deploy.sh {execute the deploy.sh}

The script will create the following Kubernetes resources:

- Namespace: airflow

- Secret: airflow-secrets

- ConfigMap: airflow-configmap

- Deployment: airflow

- Service: airflow

- Storage class: airflow-sc

- Persistent volume: airflow-dags, airflow-logs

- Persistent volume claim: airflow-dags, airflow-logs

- Service account: airflow

- Role: airflow

- Role binding: airflow

The script will complete when pods are in a running state.
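If you want to re-check pod readiness yourself later (for example after a node is replaced), kubectl wait can block until the pods are Ready. This is a sketch and not a description of what deploy.sh does internally; wait_for_pods is a hypothetical wrapper, and the 300s default timeout is an assumption.

```shell
# Hypothetical wrapper: blocks until every pod in the given namespace is
# Ready, or until the timeout elapses (kubectl then exits non-zero).
# Arguments: [namespace, default "airflow"] [timeout, default "300s"]
wait_for_pods() {
  namespace=${1:-airflow}
  timeout=${2:-300s}
  kubectl wait --for=condition=Ready pods --all -n "$namespace" --timeout="$timeout"
}

# Usage (commented out):
# wait_for_pods airflow 600s
```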

This script will deploy a Classic Load Balancer whose targets are the nodes on which the pods are running.

Pods are built from the Docker Image which we created above.

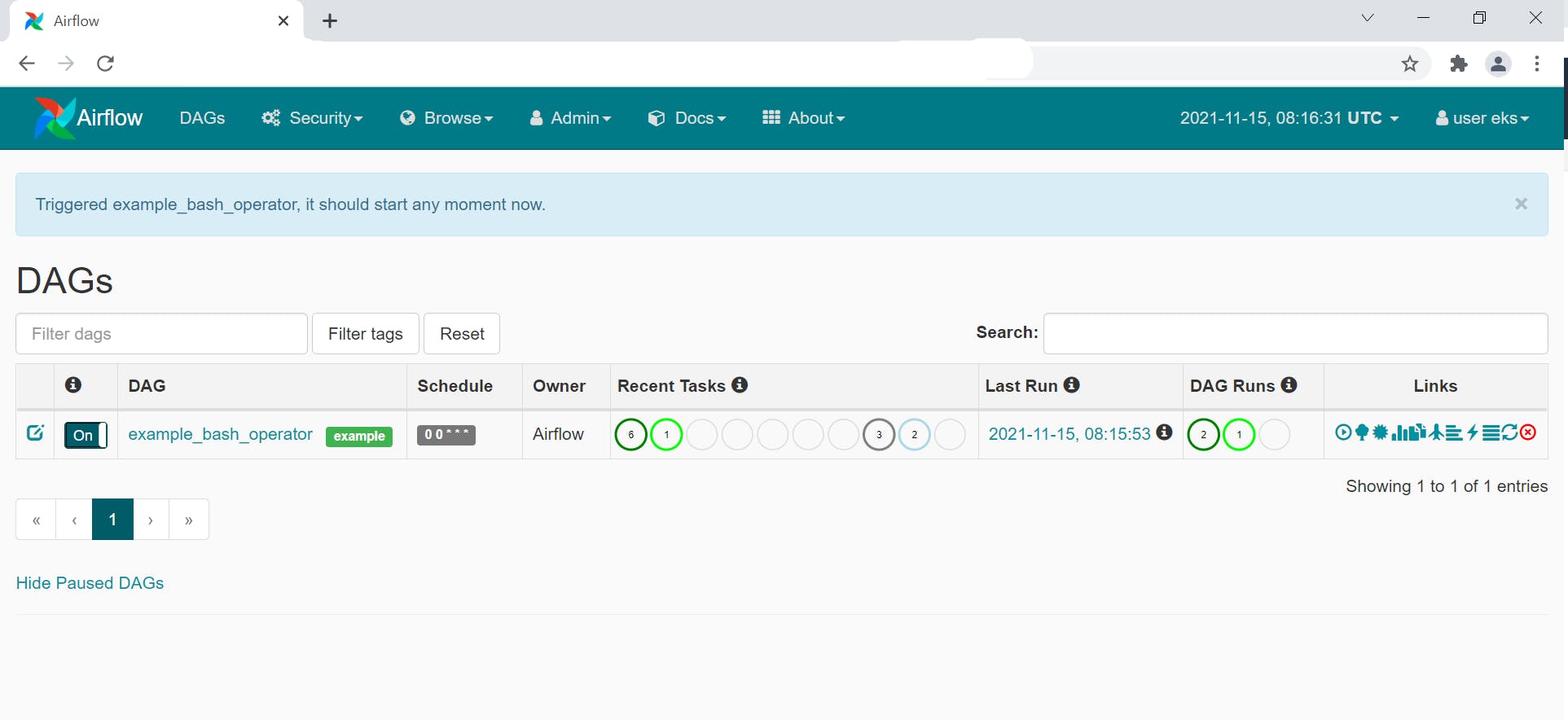

Airflow Walkthrough:

Let's log in to the Airflow web UI and trigger a sample workflow that is included in the demo code. Obtain the DNS name of the Airflow web-server:

echo "http://$(kubectl get service airflow -n airflow -o jsonpath="{.status.loadBalancer.ingress[].hostname}"):8080/login"

Log into the Airflow dashboard using username: eksuser and password: ekspassword (Go ahead & change the password as soon as you log in)

FINALLY - AIRFLOW IS UP & RUNNING

DISCLAIMER- This DEMO/POC will incur some charges for RDS, EFS, EKS. So please make sure to clean up the environment once done.

CLEANUP COMMANDS ARE THERE ON THE MENTIONED REPO:

github.com/Kunal-Shah107/AIRFLOW-ON-AWS-EKS..

SOLUTION REFERENCE- aws.amazon.com/blogs/containers/running-air..

I know it's a bit technical & lengthy, and that too in my first blog :(

But I had fun deploying Airflow manually, as it can be cost-efficient and highly scalable for a production-level run compared to Amazon Managed Workflows for Apache Airflow (MWAA).

THANK YOU FOR YOUR PATIENCE THROUGH ALL THIS :)

“As they say, Good things come to those who wait”